Lecture 20: Multiple Testing#

STATS 60 / STATS 160 / PSYCH 10

Concepts and Learning Goals:

False positive & False negative rates

Significance level of a test

Multiple testing: testing multiple hypotheses at once

\(p\)-hacking

Family-wise error rate

Bonferroni correction

Hypothesis testing recap#

We gather data and seem to observe a trend. How likely is it that this trend is real? Could it just be the result of random noise?

Hypothesis testing paradigm:

State a null hypothesis:

A model for how the data could have been generated if it was just random noise

Calculate a \(p\)-value:

The probability of seeing an outcome/trend at least as extreme as ours, if the data was just random noise (if the null hypothesis were true).

Decide if you should reject the null hypothesis.

Choose a level \(\alpha\) at which to reject the null hypothesis, say \(\alpha = 0.05\).

If the \(p\)-value is smaller than \(\alpha\), we “reject the null hypothesis.”

If the data really was random noise, we’d expect to see something like our outcome \(\le \alpha\) fraction of the time.

False positives#

Suppose I classify Monday’s \(8\) images as AI or not by randomly guessing.

If I get lucky and guess \(7\) or more correct, then my \(p\)-value will be \(< 5\%\).

import math

for t in range(9):

print("p-value for >=",t," correct guesses is ", sum([math.comb(8,k) * (1/2)**(8) for k in range(t,9)]))

p-value for >= 0 correct guesses is 1.0

p-value for >= 1 correct guesses is 0.99609375

p-value for >= 2 correct guesses is 0.96484375

p-value for >= 3 correct guesses is 0.85546875

p-value for >= 4 correct guesses is 0.63671875

p-value for >= 5 correct guesses is 0.36328125

p-value for >= 6 correct guesses is 0.14453125

p-value for >= 7 correct guesses is 0.03515625

p-value for >= 8 correct guesses is 0.00390625

What if I make a lot of repeat attempts?

In 1000 attempts, I expect to get 7 or more correct about 30 times!

from scipy.stats import binom

num_lucky = sum([1 for _ in range(1000) if binom.rvs(n=8, p=0.5, size=1) >= 7])

print("The number of lucky attempts was ",num_lucky," out of 1000")

The number of lucky attempts was 30 out of 1000

In my hypothesis test, this would cause a false positive: we falsely conclude that I am probably good at deciding if images are AI/not.

Questions#

Suppose our data really is just random noise (the null hypothesis is true), but we perform the same experiment \(100\) times.

If we set our level for rejecting the null hypothesis at \(\alpha = 0.05 = \frac{1}{20}\), how many times would we expect to end up rejecting the null hypothesis?

In other words, out of 100 trials, how many false positives do we expect to see?

Answer: we expect \(100 \cdot \alpha = \frac{100}{20} = 5\) false positives.

Is the \(p\)-value a random quantity?

Remember that the \(p\)-value is the chance we saw our data or something more extreme, under the null hypothesis.

Answer: yes, it is a random quantity.

False positives#

We observed a seeming trend, and performed a hypothesis test.

A false positive occurs when the null hypothesis was secretly true, but based on the outcome of our hypothesis test, we decide that the trend is likely real.

This happens because:

There is variability in our data (it comes from a random sample)

Hence, there is variability in our \(p\)-value (the \(p\)-value is a function of the data)

Even if the data is generated according to the null hypothesis, we’ll see data that causes a very small \(p\)-value some of the time.

Significance level#

The level or significance level \(\alpha\) at which we reject the null hypothesis controls the fraction of false positives.

The level is the threshold \(\alpha\) that we set for the \(p\)-value:

If the \(p\)-value is less than \(\alpha\), we reject the null hypothesis.

When we design our test, we choose the level. A common choice of level is \(\alpha = 0.05\).

Question: If you increase the level, will the fraction of false positives increase or decrease?

If the level is \(\alpha\), we expect to get an \(\alpha\)-fraction of false positives.

Question: In scientific publication, it is standard to require the level \(\alpha = 0.05\). What percent of published statistically significant trends do you expect are actually random noise?

It could be as high as \(5\% = 1/20\)! Because \(5\%\) is the level required for publication.

Question: Why shouldn’t I just set my level \(\alpha = 1/100000\) or even \(\alpha = 0\)? Then I will never get a false positive.

A small level \(\alpha\) means we extremely skeptical that any trend we observe is statistically significant.

If we setting the level too small we’ll get a lot of false negatives.

If \(\alpha = 0\), we will literally never reject the null hypothesis.

Multiple testing#

Can you think of any situations where you would naturally want to do a lot of different experiments in parallel?

A biology/physics/chemistry lab has multiple experiments going at once

A pharmaceutical company might be developing multiple drugs at once

A company might be testing out multiple versions of the same product

Many different contestants trying to guess something (like a lottery number)

If we do a lot of experiments in parallel, the chances of getting at least one false positive increase.

Are you psychic?#

](https://tselilschramm.org/introstats/figures/psychic-qr.png)

[Psychic Science

Choose the following procedure:

25 cards

Clairvoyance

Open deck

Cards seen

How many did you get right?

Hypothesis testing#

Do you have ESP? Formulate a hypothesis test.

What is your null hypothesis?

What is the \(p\)-value (in plain English)? How would you compute it?

The ESP applet gave you a \(p\)-value. Do you reject the null hypothesis?

Class data#

Psychics in STATS 60? {.smaller}#

Do you believe that there are psychics among us?

In a class of 50 with no psychics, we would expect \(.05 \cdot 50 = 2.5\) False Positives.

In fact, we can model the number of False positives using coinflips!

The number of False Positives is like the number of heads we get if we flip \(n = 50\) coins, each with heads probability \(0.05\).

Question: what is the chance that, as a class of \(n = 50\), we get at least one false positive?

Using the probability for coinflips, it should be $\(1 - \Pr[ 0 \text{ heads}] = 1 - \binom{50}{0} \left(0.95\right)^{50} \approx 0.92\)$

The “overall” False positive rate is over 90%!

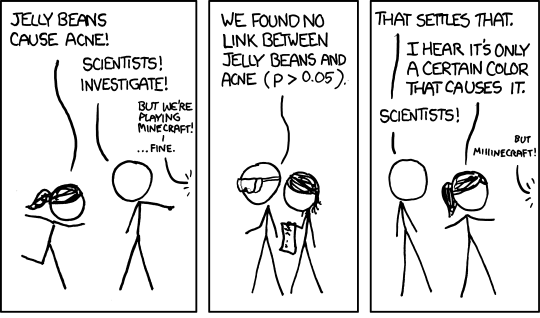

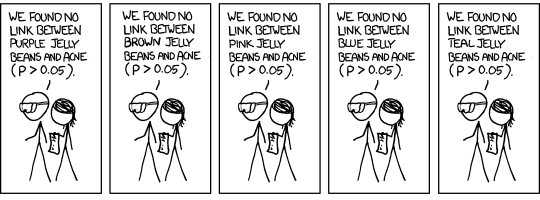

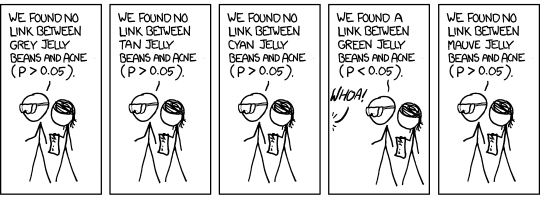

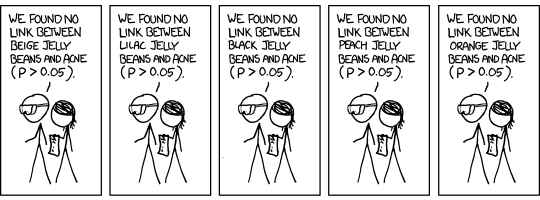

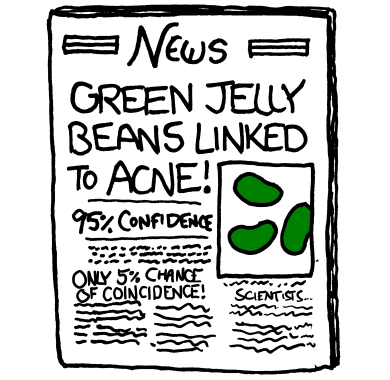

Comic Relief#

#

This comic comes from xkcd.

#

#

#

Multiple Testing#

What to do when you want to test multiple hypotheses

Multiple Testing#

In both examples today, we were testing multiple hypotheses:

whether each person in the room has ESP (about 30 tests)

whether each color of jellybean causes acne (20 tests)

If we do \(m > 1\) hypothesis tests, each at level-\(\alpha\), then the “overall” probability of having a false positive is larger than \(\alpha\).

This can lead to (accidental) “\(p\)-hacking,” a phenomenon wherein the \(p\)-value of a scientific experiment inaccurately describes its true null probability.

Usually caused when scientists try to test multiple hypotheses at once, and fail to account for it in their statistical analysis.

This “overall” probability is called the family-wise error rate.

What if we wanted to guarantee that \(\text{FWER} \leq \alpha\)?

Bonferroni Correction#

Instead of testing each of the \(m\) hypotheses at level \(\alpha\), test each hypothesis at level \(\frac{\alpha}{m}\).

Why Does This Work?#

Suppose the null hypothesis is true in \(m_0\) of the \(m\) hypotheses.

Applying the Bonferroni Correction#

If we do 64 tests for ESP, then the Bonferroni correction says:

If you want to guarantee that the FWER is less than \(.05\),

test each hypothesis at level \(\frac{.05}{64} \approx .0008\).

Is anyone still psychic now?

The Dark Side of Bonferroni#

The Bonferroni correction makes it much harder to reject the null hypothesis.

This keeps the false positive rate under control.

But if we don’t reject the null hypothesis, we risk having a lot of false negatives.

In general, the Bonferroni correction increases the false negative rate.

So if there is a psychic among us, we are less likely to discover them.

Recap#

The significance level of a test controls the false positive rate

If we perform a test of level \(\alpha\), we expect false positives an \(\alpha\)-fraction of the time

If we make the level super small, we’ll get a lot of false negatives.

Multiple testing: when we want to test many hypotheses at once

The family-wise error rate is the chance of at least one false positive

\(p\)-hacking

Bonferroni correction for multiple testing